Getty TikTok

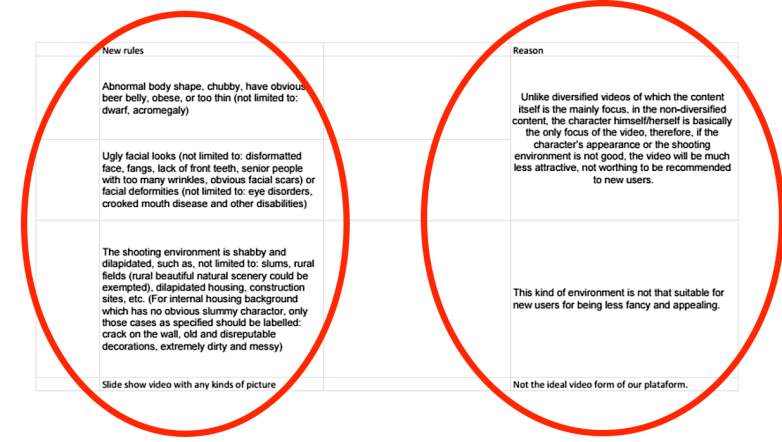

TikTok, the popular video-sharing app, enacted numerous discriminatory guidelines in order to grow its user base, according to internal documents obtained by The Intercept. The video platform, which is owned by the Chinese tech company ByteDance, issued rules directing moderators to conceal videos shared by users deemed “ugly, chubby, seniors with too many wrinkles, facial deformities and other disabilities.”

The documents explain that, if such videos were allowed, they would create the “kind of environment [which] is not that suitable for new users for being less fancy and appealing.” Other characteristics moderators were told to screen out included “obvious facial scars and crooked mouth disease.”

TikTok Guidelines for moderators

As for excluding those who appear to be a “dwarf” or to suffer from “acromegaly,” a hormonal disorder that causes enlargement of the face, hands, and feet, the stated reasoning was that “in the non-diversified content, himself/herself is basically the only focus of the video, therefore, if the character’s appearance or shooting environment is not good, the video will be much less attractive, not worthing to be recommended to new users.”

TikTok Defended Guidelines As A Way To Prevent Bullying

TikTok users in India

In an article published by the German publication Netzpolitik, which was the first to report on this kind of video suppression, TikTok said the reason it wanted to limit the number of videos showing “users with abnormal body shape, have obvious beer belly, lack of front teeth, slums, rural fields, and dilapidated housing,” was to prevent bullying.

After these new internal documents leaked, a year after Netzpolitik revealed the platform's suppression of videos by disabled and LGBTQ users, TikTok spokesperson Josh Gartner told The Intercept that “most of” these guidelines “are either no longer in use, or in some cases appear to never have been in place,” and that these rules “represented an early blunt attempt at preventing bullying, but are no longer in place, and were already out of use when The Intercept obtained them.”

However, sources told the outlet that not only were these guidelines still in use until late 2019, but that the livestream policy was actually created in 2019.

TikTok Initiated New Community Guidelines In January To ‘Inspire Creativity & Create Joy’

Going into the new year, TikTok put out a new set of rules in order to allow their “community to help maintain a safe shared space” and “cultivate an environment for authentic interactions by keeping deceptive content and accounts off our platform.”

In its Community Guidelines, TikTok banned the use of the app to promote terrorism, crime and harmful activity, which included banning the posting of “dangerous” symbols, logos, flags, and uniforms.

No longer could users display content featuring guns, explosives, drugs, “get-rich-quick schemes,” or any type of violent and graphic content.

TikTok also made rules to ban the posting of anything that fell under the category of “misleading information,” which included hoaxes, phishing attempts, or “manipulating content that misleads community members about elections or other civic processes.”
