
Timnit Gebru, who helped lead Google’s Ethical Artificial Intelligence team, says she was fired over an email criticizing the way the company treats workers of color and women, as well as over a conflict about a research paper that she says was censored by the tech giant.

“We know these corporations are not for Black women,” she wrote on Twitter, where she has received a flood of support. The internal email, which raised questions about censorship of the research paper, was considered by Google to be “inconsistent with the expectations of a Google manager,” according to Platformer.

In an open letter published on Medium.com, some Google employees have criticized the termination as “unprecedented research censorship.”

“We, the undersigned, stand in solidarity with Dr. Timnit Gebru, who was terminated from her position as Staff Research Scientist and Co-Lead of Ethical Artificial Intelligence (AI) team at Google, following unprecedented research censorship,” the letter says. “We call on Google Research to strengthen its commitment to research integrity and to unequivocally commit to supporting research that honors the commitments made in Google’s AI Principles.”

Here’s what you need to know:

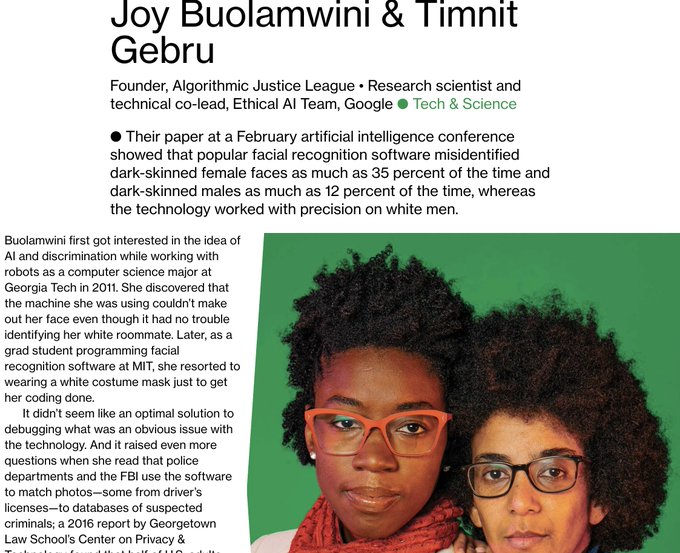

1. Gebru, a Stanford Alum, Previously Did a Landmark Study on Racial Bias in Facial Recognition Software

According to Bloomberg, Gebru is “an alumni of the Stanford Artificial Intelligence Laboratory” and “one of the leading voices in the ethical use of AI.”

Bloomberg reports that she is “well-known for her work on a landmark study in 2018 that showed how facial recognition software misidentified dark-skinned women as much as 35% of the time, whereas the technology worked with near precision on White men.”

A 2018 article published on LinkedIn called her a “Techno-Activist Addressing Bias in Artificial Intelligence.”

“Gebru, who has a PhD from the Stanford Artificial Intelligence Laboratory, is at the forefront of many of the efforts to correct this glaring flaw in the critically important field of AI,” that article explained. “Whether it be researchers working on data sets, the creation of standards and guidelines for machine learning or the fairness and transparency with which we go about training computers to create algorithms, Gebru is taking on some big challenges.”

A 2018 article in MIT Technology Review reported that Gebru was “part of Microsoft’s Fairness, Accountability, Transparency, and Ethics in AI group, which she joined last summer. She also cofounded the Black in AI event at the Neural Information Processing Systems (NIPS) conference in 2017 and was on the steering committee for the first Fairness and Transparency conference.”

She told MIT Technology Review, “We are in a diversity crisis for AI. In addition to having technical conversations, conversations about law, conversations about ethics, we need to have conversations about diversity in AI. We need all sorts of diversity in AI. And this needs to be treated as something that’s extremely urgent.”

She also told the publication that it was difficult to be a Black woman in the field, saying, “It’s not easy. I love my job. I love the research that I work on. I love the field. I cannot imagine what else I would do in that respect. That being said, it’s very difficult to be a black woman in this field. When I started Black in AI, I started it with a couple of my friends. I had a tiny mailing list before that where I literally would add any black person I saw in this field into the mailing list and be like, ‘Hi, I’m Timnit. I’m black person number two. Hi, black person number one. Let’s be friends.'”

2. The Letter From Colleagues Slams Google’s Diversity

Until December 2, 2020, the letter states, “Dr. Gebru was one of very few Black women Research Scientists at the company, which boasts a dismal 1.6% Black employees overall. Her research accomplishments are extensive, and have profoundly impacted academic scholarship and public policy. Dr. Gebru is a pathbreaking scientist doing some of the most important work to ensure just and accountable AI and to create a welcoming and diverse AI research field.”

The letter continues:

Instead of being embraced by Google as an exceptionally talented and prolific contributor, Dr. Gebru has faced defensiveness, racism, gaslighting, research censorship, and now a retaliatory firing. In an email to Dr. Gebru’s team on the evening of December 2, 2020, Google executives claimed that she had chosen to resign. This is false. In their direct correspondence with Dr. Gebru, these executives informed her that her termination was immediate, and pointed to an email she sent to a Google Brain diversity and inclusion mailing list as pretext.

The contents of this email are important, the letter states.

In it, Dr. Gebru pushed back against Google’s censorship of her (and her colleagues’) research, which focused on examining the environmental and ethical implications of large-scale AI language models (LLMs), which are used in many Google products. Dr. Gebru and her colleagues worked for months on a paper that was under review at an academic conference. In late November, nearly two months after the piece had been internally reviewed and approved for publication through standard processes, Google leadership made the decision to censor it, without warning or cause. Dr. Gebru asked them to explain this decision and to take accountability for it, and for their lackluster stand on discriminatory and harassing workplace conditions. The termination is an act of retaliation against Dr. Gebru, and it heralds danger for people working for ethical and just AI — especially Black people and People of Color — across Google.

The letter issued a series of demands, including a commitment to academic freedom.

3. Gebru, the Daughter of an Engineer, Wrote on Twitter That She Was Fired Because of the Email, Adding ‘They Can Come After Me’

Gebru announced on Twitter that she had been fired.

“I was fired by @JeffDean for my email to Brain women and Allies. My corp account has been cutoff. So I’ve been immediately fired :-)” she wrote on Twitter. (Dean’s Twitter profile describes him as “Senior Fellow & SVP, Google AI (Research and Health). Co-designer/implementor of software systems like @TensorFlow, MapReduce, Bigtable, Spanner.”)

According to Platformer, Dean, the head of Google research, claimed to employees that Gebru “had issued ultimatum and would resign unless certain conditions were met.”

Gebru continued on Twitter, “I would post my email here but my corp account has been cutoff. I feel bad for my teammates but for me its better to know the beast than to pretend. @negar_rz didn’t even have any idea things had escalated this quickly.”

She added, “I need to be very careful what I say so let me be clear. They can come after me. No one told me that I was fired. You know legal speak, given that we’re seeing who we’re dealing with. This is the exact email I received from Megan who reports to Jeff. Who I can’t imagine would do this without consulting and clearing with him of course.”

She then wrote “So this is what is written in the email:” and included the following:

“Thanks for making your conditions clear. We cannot agree to #1 and #2 as you are requesting. We respect your decision to leave Google as a result, and we are accepting your resignation. However, we believe the end of your employment should happen faster than your email reflects because certain aspects of the email you sent last night to non-management employees in the brain group reflect behavior that is inconsistent with the expectations of a Google manager. As a result, we are accepting your resignation immediately, effective today. We will send your final paycheck to your address in Workday. When you return from your vacation, PeopleOps will reach out to you to coordinate the return of Google devices and assets.”

Gebru also wrote, “Apparently my manager’s manager sent an email my direct reports saying she accepted my resignation. I hadn’t resigned—I had asked for simple conditions first and said I would respond when I’m back from vacation. But I guess she decided for me :) that’s the lawyer speak.”

On November 30, Gebru wrote on Twitter, “Is there anyone working on regulation protecting Ethical AI researchers, similar to whistleblower protection? Because with the amount of censorship & intimidation that goes on towards people in specific groups, how does anyone trust any real research in this area can take place?”

In a Medium.com article, Gebru described her background.

“My dad was an electrical engineer, and my two sisters were also electrical engineers. It was kind of destiny. When I was growing up, I really liked math and physics, but because my dad was an electrical engineer, it seemed like a natural thing for me to pursue,” the article says.

“When I entered college, I was planning on double majoring in electrical engineering and music, but I found that I didn’t like some of the classes. I worked at Apple for a few years doing analog circuit design. Then I got a master’s and got even deeper into the hardware side of things.”

She added, “I started — and eventually left — a PhD working on optical coherence tomography because I didn’t like it and I felt very isolated. Instead of being really interested in making physical devices like interferometers, I became interested in computer vision. During that time, I took an image processing class and started to get interested in computer vision. That sparked my decision to pursue my PhD in computer vision [advised by Fei-Fei Li, AI4ALL’s co-founder].”

4. People Rushed to Gebru’s Support

Support for Gebru flooded Twitter. “@timnitGebru is the best manager I’ve had. This is unreal,” wrote one person.

“We’re seeing – IN REAL TIME – exactly how aggressively these tech companies move against Black women. Do not look away from this. If you’re at Google now please speak up about this in any way you can – especially externally if you have the safety to do so,” wrote Ifeoma Ozoma, founder of Earthseed.

Natasha Jaques, a research scientist at Google, wrote, “I’m so sorry to see this. Timnit’s research is incredibly important to our field and deserves recognition and support.”

Wrote Jade Abbott, a staff engineer for Team Retro Rabbit, “I have few small hopes for big tech. One was that Google continued to support the vital work of @timnitGebru. It indicated a willingness for hard self-reflection on their outputs – A system that was successfully fighting itself & improving as a result.”

Abeba Birhane, a Ph.D. student, wrote, “Just like that, Google lost the little legitimacy it had. @timnitGebru has been nothing but the ideal role model for Black women, an aspiration, and a towering figure that keeps pushing the whole field of AI ethics to respectable standard.”

There have been many similar comments.

5. The Research Paper Raised Concerns That an AI Tool Could Replicate Hate Speech

The research paper, which has three other co-authors, “examined the environmental and ethical implications of an AI tool used by Google and other technology companies,” NPR reported.

According to NPR, the paper examined a tool that “scans massive amounts of information on the Internet and produces text as if written by a human.” Gebru’s paper raised concerns that the tool could end up replicating hate speech found online.

Gebru claims Google bosses asked her to retract it. She then turned to an internal email list to criticize Google for “silencing marginalized voices,” according to NPR, and for not giving her a clear explanation of why the paper was censored.

Platformer obtained Gebru’s email in full as well as Dean’s response.

Her email said in part, “Your life gets worse when you start advocating for underrepresented people, you start making the other leaders upset when they don’t want to give you good ratings during calibration. There is no way more documents or more conversations will achieve anything.”

The email added, “Silencing marginalized voices like this is the opposite of the NAUWU principles which we discussed. And doing this in the context of ‘responsible AI’ adds so much salt to the wounds. I understand that the only things that mean anything at Google are levels, I’ve seen how my expertise has been completely dismissed. But now there’s an additional layer saying any privileged person can decide that they don’t want your paper out with zero conversation. So you’re blocked from adding your voice to the research community—your work which you do on top of the other marginalization you face here.”

In his own email to employees, also published on Platformer, Dean wrote, in part, “Unfortunately, this particular paper was only shared with a day’s notice before its deadline — we require two weeks for this sort of review — and then instead of awaiting reviewer feedback, it was approved for submission and submitted.”

He said the paper didn’t meet Google’s “bar” for publication. “It ignored too much relevant research — for example, it talked about the environmental impact of large models, but disregarded subsequent research showing much greater efficiencies. Similarly, it raised concerns about bias in language models, but didn’t take into account recent research to mitigate these issues,” Dean’s email said.

He added, “Timnit responded with an email requiring that a number of conditions be met in order for her to continue working at Google, including revealing the identities of every person who Megan and I had spoken to and consulted as part of the review of the paper and the exact feedback. Timnit wrote that if we didn’t meet these demands, she would leave Google and work on an end date. We accept and respect her decision to resign from Google.”
