Facebook is now warning users about extremist content.

Facebook has added a new warning to its social media service. Some users are noticing a warning popping up on their screen telling them that they “may have been exposed to harmful extremist content recently.”

The Message Warns Users They May Have Been Exposed to Extremist Content, But Often Doesn’t Reveal What Prompted the Warning

Many Facebook users are commenting on a new warning that pops up on their screens, informing them that they may have been exposed to extremist content.

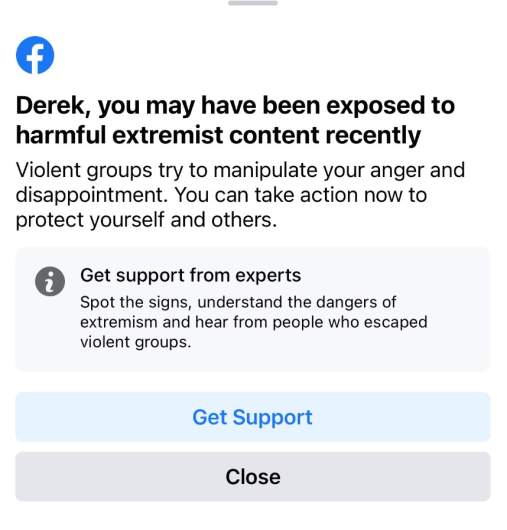

Heavy has been notified by multiple people who received the warning. For some, the warning did not add any information about why it had been given. Below is a screenshot from the warning, provided by a family member. The warning appears to be identical for each person who receives it, according to others who have also been posting about getting the warning.

The warning begins with a headline that reads: “You may have been exposed to harmful extremist content recently.” Then it follows with: “Violent groups try to manipulate your anger and disappointment. You can take action now to protect yourself and others.”

One person on Facebook noted that they received the warning every time they pulled up a story about ExxonMobil lobbying. However, other users are noting that the warning sometimes doesn’t include any indication of what content prompted the warning, or even what user, Facebook page, or group created the content that prompted the warning.

Some users are noting that the warning appears every time they open the Facebook mobile app.

The Warning Is Followed by a Link for Getting Support, But Not Everyone Can View the Link

The warning is followed by a link labeled “Get support from experts,” which promises information on how to “spot the signs, understand the dangers of extremism and hear from people who escaped violent groups.”

Heavy followed the link provided with the warning. The link itself is actually not viewable by everyone. When Heavy clicked on the link, the headline appeared, followed by the message: “This feature isn’t available to everyone right now.”

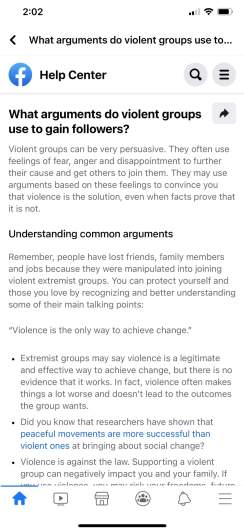

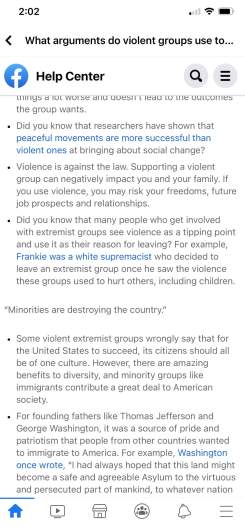

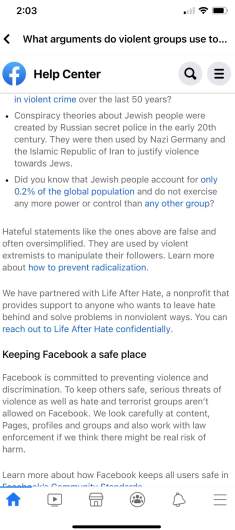

However, if you receive the warning, you can view the full page. Screenshots of the page are included with this article. The page includes examples of arguments that violent groups use to gain followers, guidance on understanding common arguments (such as “violence is the only way to achieve change”), and information on how to keep Facebook a safe place.

Another Version Asks if the User Is Concerned Someone Else Is Becoming an Extremist

People are sharing their experiences with receiving the warning, and noting that there’s another version that asks, “Are you concerned that someone you know is becoming an extremist?”

Some people are commenting on social media that the posts feel like “George Orwell.”

Others are joking that if they haven’t received the warning that their friends may be sharing extremist content, it might mean they are the extremist among their friends.

Many people are commenting on Twitter that they received the Facebook warning.

One person commented on Twitter, “Wasn’t it Facebook who experimented on their users by showing a portion of them only content that would make them happy and another content that would anger them to figure out which provided more engagement? Does that mean Facebook is the extremist violent group?”

Facebook did once conduct such an experiment. In 2014, The Guardian reported that Facebook admitted it had run a news feed experiment testing whether it could affect users’ emotions. The company manipulated what appeared in the news feeds of 689,000 people to see whether “emotional contagion” could make people feel more positive or negative.

For the experiment, news feeds were filtered to reduce exposure to either positive content or negative content.

The study found: “Emotions expressed by friends, via online social networks, influence our own moods, constituting, to our knowledge, the first experimental evidence for massive-scale emotional contagion via social networks.”

The full study was published in the Proceedings of the National Academy of Sciences.

The experiment was conducted in 2012 and users were not notified it was being done, The Guardian reported. The study said the changes were “consistent with Facebook’s data use policy, to which all users agree prior to creating an account on Facebook, constituting informed consent for this research.”